Corporate treasury used to be a backstage crew role, quietly keeping liquidity flowing and making sure the business could fund its plans. But the rise of AI in banking and finance has dragged treasury into the spotlight, especially when it comes to risk. Suddenly, board members are expected to understand what algorithms are doing with cash forecasts, credit exposures, and hedging strategies. And many don't know where to start.

There’s a strange mix of urgency and fog. On one hand, treasury teams are under pressure to get faster, more accurate, more predictive. On the other, there’s confusion over what AI actually is in this context. Is it just smarter spreadsheets? Does it replace decision-makers? Can it spot a risk before a human can? Everyone wants clarity, especially the board. They’re responsible if things go sideways, yet the tech talk can sound like wizardry.

This isn’t just about staying current. It’s about knowing what questions to ask, what risks to expect, and how to govern a treasury that’s being reshaped by machine learning. If you sit on a board, this is your brief, minus the tech hype and acronyms.

TL;DR: Quick brief for boardroom clarity

- Treasury risk is getting more complex as exposures move faster and markets react in real time.

- AI tools can help forecast cash, flag liquidity issues, and detect outlier behavior before it turns into a crisis.

- Many directors believe AI means full automation, but in practice it's often improved pattern recognition built on cleaner data.

- AI doesn’t eliminate risk. It changes how risk is spotted, framed, and acted upon.

- Boards must understand where AI is used, who owns its output, and how it fits into the company’s risk appetite.

- Strong governance, not blind trust in tech, is the backbone of AI-powered treasury operations.

Not just robots doing math faster

Plenty of boardrooms carry an outdated image of AI as a black box that replaces people, but that’s not what’s happening in treasury. What AI does best here is help humans see better. Faster anomaly detection, tighter cash flow predictions, sharper insights into FX and interest rate risk. But the decisions? Still made by people.

Picture a scenario where a global firm is juggling multiple currencies, volatile rates, and supplier payments across time zones. Instead of waiting for a human to spot a gap in a spreadsheet, AI can flag an exposure spike within minutes. It’s not taking over; it’s alerting the right person faster.

That's where boards need to shift their thinking. The value isn't in letting go of control; it's in improving visibility and reaction time. AI isn't the driver. It's your headlights.

When the numbers don’t feel real anymore

Imagine sitting on the audit committee and seeing a quarterly report where cash positions shift by millions and no one’s quite sure why. Maybe a hedge moved early. Maybe a counterparty defaulted. Maybe it’s just a timing issue. This happens more than boards like to admit.

Now add in an AI system that tracks behavioral patterns in payment flows. One Thursday afternoon, it picks up a deviation: payments to a regional supplier are spiking and don't match the usual cycle. Before treasury even runs its daily checks, the system has sent an alert.

That one anomaly turns out to be a sign of a fraud attempt: a former contractor had gained access to credentials. The loss? Zero. All because AI flagged something a person might not have caught in time.

Scenarios like this aren't far-fetched. It's exactly the kind of quiet win boards don't always hear about, but they should. It's how AI in banking and finance is reshaping treasury: by making risk visible before it becomes a crisis.
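For readers curious about what "a deviation from the usual cycle" can mean mechanically, here is a minimal Python sketch. It assumes weekly payment totals for one supplier are already available as a list, and it uses a simple trailing-window z-score (how many standard deviations a week sits from the recent average). The function name, the eight-week window, and the threshold of 3 are hypothetical choices for illustration, not how any particular treasury system works; a production system would likely also model payment cycles such as month-end runs explicitly.

```python
from statistics import mean, stdev


def flag_payment_anomalies(weekly_totals, window=8, threshold=3.0):
    """Return indices of weeks whose total departs sharply from the recent baseline.

    Hypothetical illustration: a week is flagged when it lies more than
    `threshold` standard deviations from the mean of the previous `window` weeks.
    This simple baseline ignores seasonality and payment cycles.
    """
    flagged = []
    for i in range(window, len(weekly_totals)):
        baseline = weekly_totals[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if weekly_totals[i] != mu:
                flagged.append(i)
            continue
        z_score = (weekly_totals[i] - mu) / sigma
        if abs(z_score) > threshold:
            flagged.append(i)
    return flagged


# Ten weeks of payments to one supplier (made-up numbers); the last week spikes.
history = [100_000, 98_500, 101_200, 99_800, 100_400,
           102_000, 97_900, 100_600, 101_000, 180_000]
print(flag_payment_anomalies(history))  # → [9]
```

Normal week-to-week noise passes quietly, while the final week's spike is flagged. The threshold is exactly the kind of setting a board can ask about: set it too low and treasury drowns in false alarms; set it too high and real anomalies slip through.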

Start asking smarter, sharper questions

Boards don’t need to be tech experts. They need to ask the right questions, the ones that reveal whether AI is working for the company or just adding flash with no value. Some good starters:

- What treasury functions are currently using AI?

- How is model performance tracked and updated?

- Who is responsible if AI makes a faulty recommendation?

- How does AI output align with our risk policy and tolerance?

- Are there scenarios where AI could create blind spots?

This isn’t about grilling the CFO or CTO. It’s about setting the tone for governance. AI doesn’t remove the board’s responsibility. It adds a layer of complexity that requires clear oversight and updated risk thinking.

People, not machines, still carry the weight

Here’s the big myth: AI will eventually make treasury autonomous. That belief has fueled a lot of bold claims. But treasury risk is full of judgment calls. Do we pre-fund that account in Argentina? Should we unwind that swap now or hold another week? These aren’t yes/no questions.

AI can offer better data, smarter alerts, and pattern recognition at speed. But it’s still the treasurer, the CFO, or the risk officer who has to make the call. Sometimes it’s based on gut feel, geopolitical insight, or knowing that a regulator in a certain region likes phone calls more than emails.

So, boards must stay human-focused. Training, responsibility, and communication matter more than algorithms. No machine can replace someone who knows why a number looks off or when to escalate a concern.

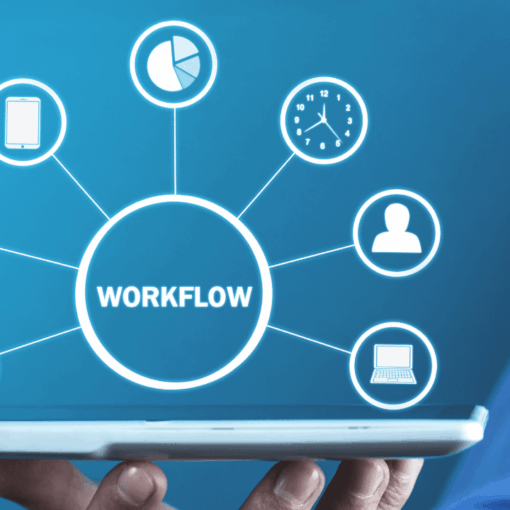

Different AI tools, different stakes

AI isn't one thing. In treasury, it shows up in many forms, from rule-based robotic process automation to advanced neural networks. Each carries a different level of risk and return.

| AI Tool Type | Used For | Board Oversight Priority |
|---|---|---|
| Machine Learning Models | Cash forecasting, anomaly detection | Validate accuracy, question inputs |
| Natural Language Processing | Reading contracts, flagging risk terms | Check legal integration, model context limits |
| Robotic Process Automation | Routine payments, data transfers | Ensure audit trail and fallback plans |
| Predictive Analytics | Liquidity planning, market risk | Confirm assumptions, stress test scenarios |

Just because a system uses AI doesn’t mean it’s making decisions. The board’s role is to know where it’s used, what kind of AI it is, and whether controls are in place if it fails.

When in doubt, transparency wins

One Fortune 500 board chair described it this way: “We don’t need to know how the clock works. We need to know what time it is, who sets it, and how to fix it if it breaks.” That’s a solid mindset for managing AI in treasury.

The point isn’t to get everyone fluent in data science. It’s to demand clarity. If treasury starts using a predictive model to guide short-term debt decisions, someone on the board should be briefed on how confident that model is, what data it uses, and what could make it break.

And if those answers aren’t coming easily, it’s time to ask why.
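One concrete way to answer "how confident is that model?" is a backtest: compare past forecasts with what actually happened. The minimal Python sketch below assumes a list of forecast cash positions and the matching actuals. The function name, the metrics chosen (mean absolute percentage error, average bias, worst single miss), and the 5% tolerance are hypothetical illustrations; a real tolerance would come from the company's own risk policy.

```python
def forecast_scorecard(forecasts, actuals, tolerance_pct=5.0):
    """Backtest summary for a cash forecast (hypothetical helper for illustration).

    tolerance_pct is an assumed policy limit on average percentage error,
    not a recommended value.
    """
    if len(forecasts) != len(actuals) or not forecasts:
        raise ValueError("forecasts and actuals must be non-empty and the same length")
    if any(a == 0 for a in actuals):
        raise ValueError("percentage error is undefined when an actual value is zero")

    errors = [f - a for f, a in zip(forecasts, actuals)]
    # Mean absolute percentage error: typical size of a miss relative to the actual.
    mape_pct = 100 * sum(abs(e) / abs(a) for e, a in zip(errors, actuals)) / len(actuals)
    # Average bias: positive means the model tends to over-forecast cash.
    bias = sum(errors) / len(errors)
    # Largest single miss, in the same currency units as the inputs.
    worst_miss = max(abs(e) for e in errors)

    return {
        "mape_pct": mape_pct,
        "bias": bias,
        "worst_miss": worst_miss,
        "within_tolerance": mape_pct <= tolerance_pct,
    }


# Four weeks of forecasts vs. actuals, in millions (made-up numbers for illustration).
report = forecast_scorecard([110, 95, 100, 120], [100, 100, 100, 100])
print(report)
```

In this made-up example the forecast misses by 8.75% on average and leans toward over-forecasting cash, so it would fail the assumed 5% tolerance. That is precisely the kind of result a board should expect to hear about, along with what treasury plans to do about it.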

Questions? Concerns? Plans to strengthen treasury?

Contact us. Our team helps boards understand treasury tech without the buzzwords. We work with directors, CFOs, and treasury leads to build governance models that scale with risk. Ask a question, start a conversation, or get help reviewing your current AI landscape.

Final notes for the boardroom playbook

- Treasury risk is moving faster, and AI helps keep pace.

- The board needs to know where AI is used and why.

- Human judgment still runs the show, even with smart tools.

- AI is a lens, not a driver. It improves visibility, not control.

- Oversight means asking better questions, not coding answers.

Boards don’t need to decode AI. They need to stay curious, stay engaged, and stay accountable. That’s how treasury risk gets smarter, not just faster.